Build a RAG-based QnA application using Llama3 models from SageMaker JumpStart

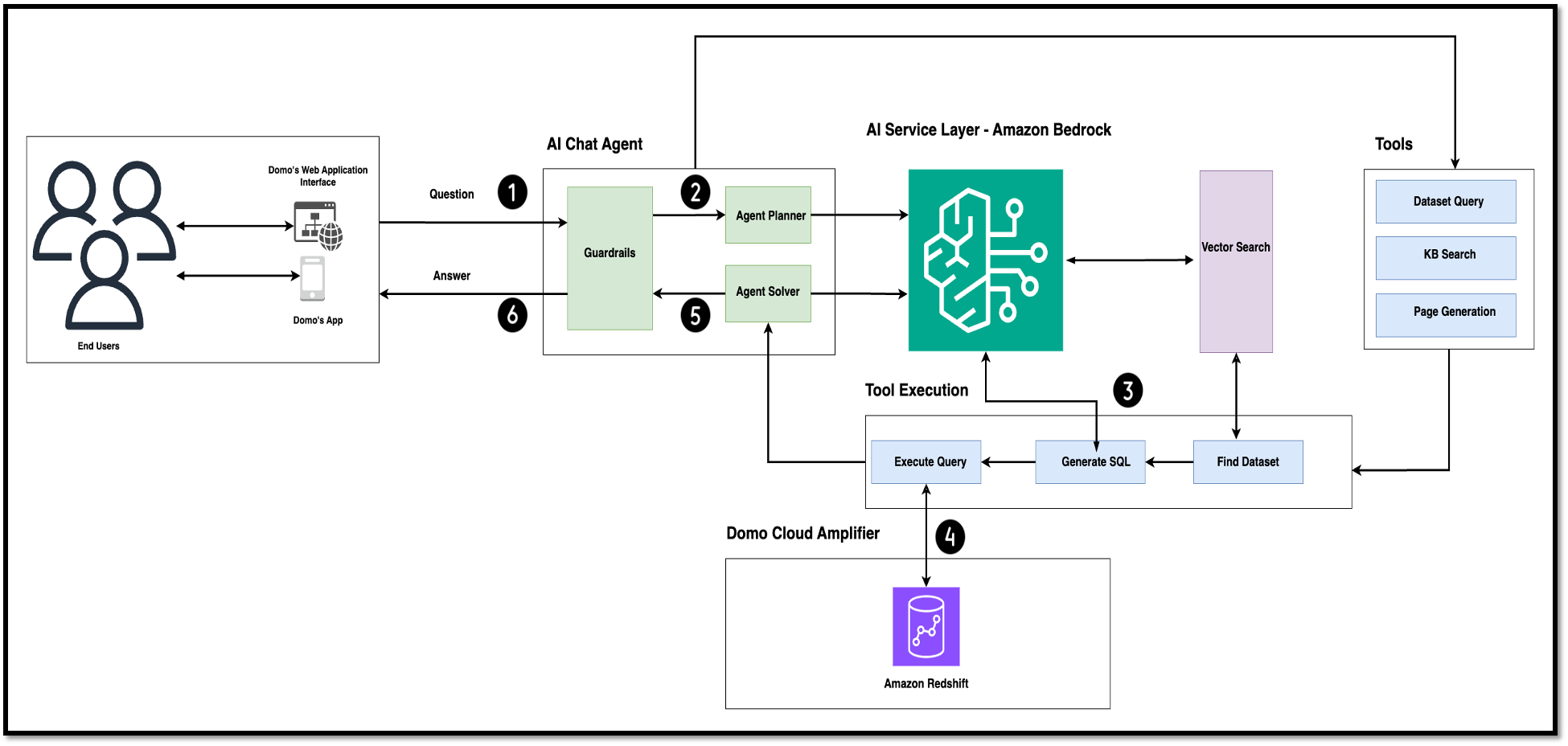

Organizations generate vast amounts of proprietary data, and extracting insights from that data is critical to achieving better business outcomes. Generative AI and foundation models (FMs) play an important role in building applications on an organization's data that improve customer experiences and employee productivity. FMs are typically pretrained on …