AWS Inferentia and AWS Trainium deliver lowest cost to deploy Llama 3 models in Amazon SageMaker JumpStart

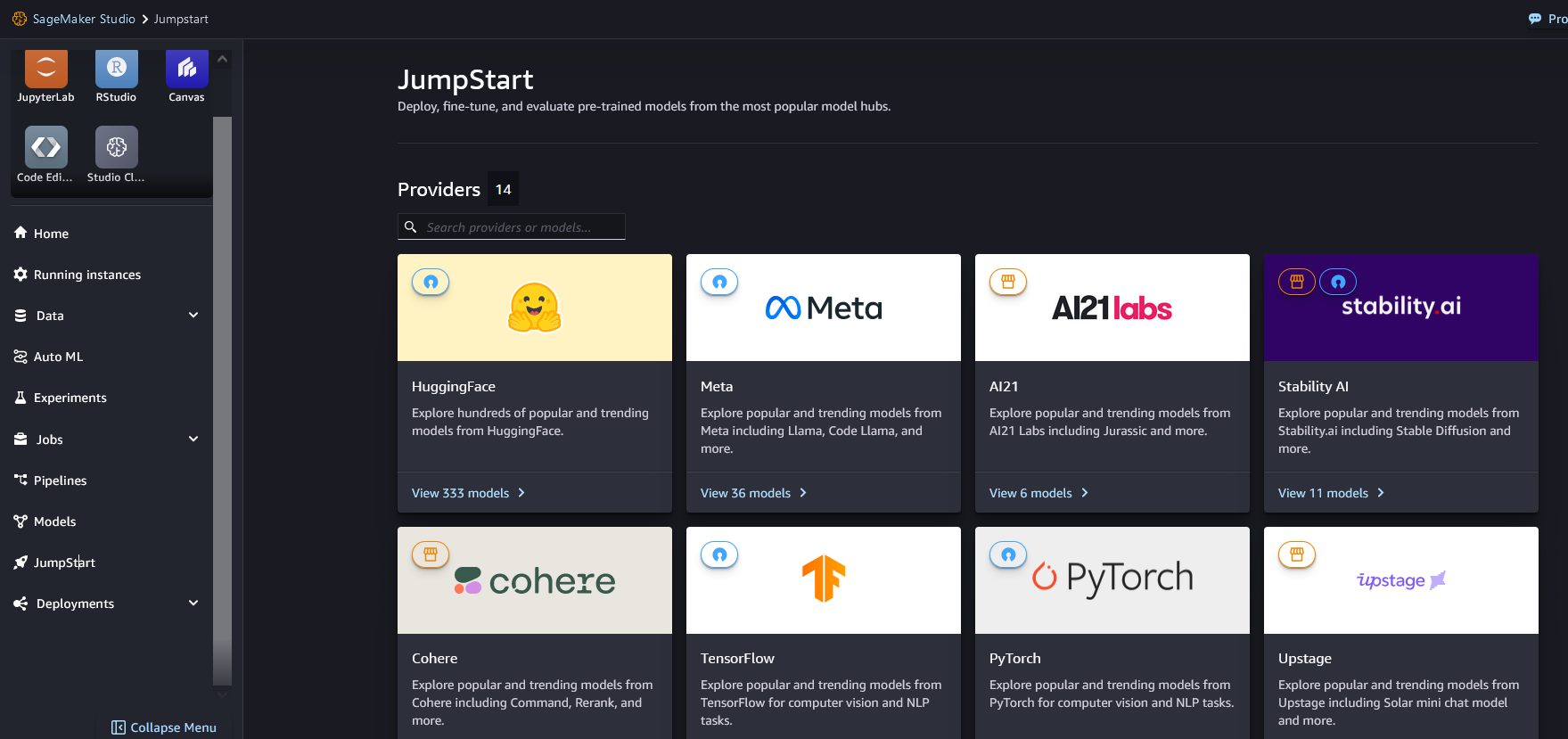

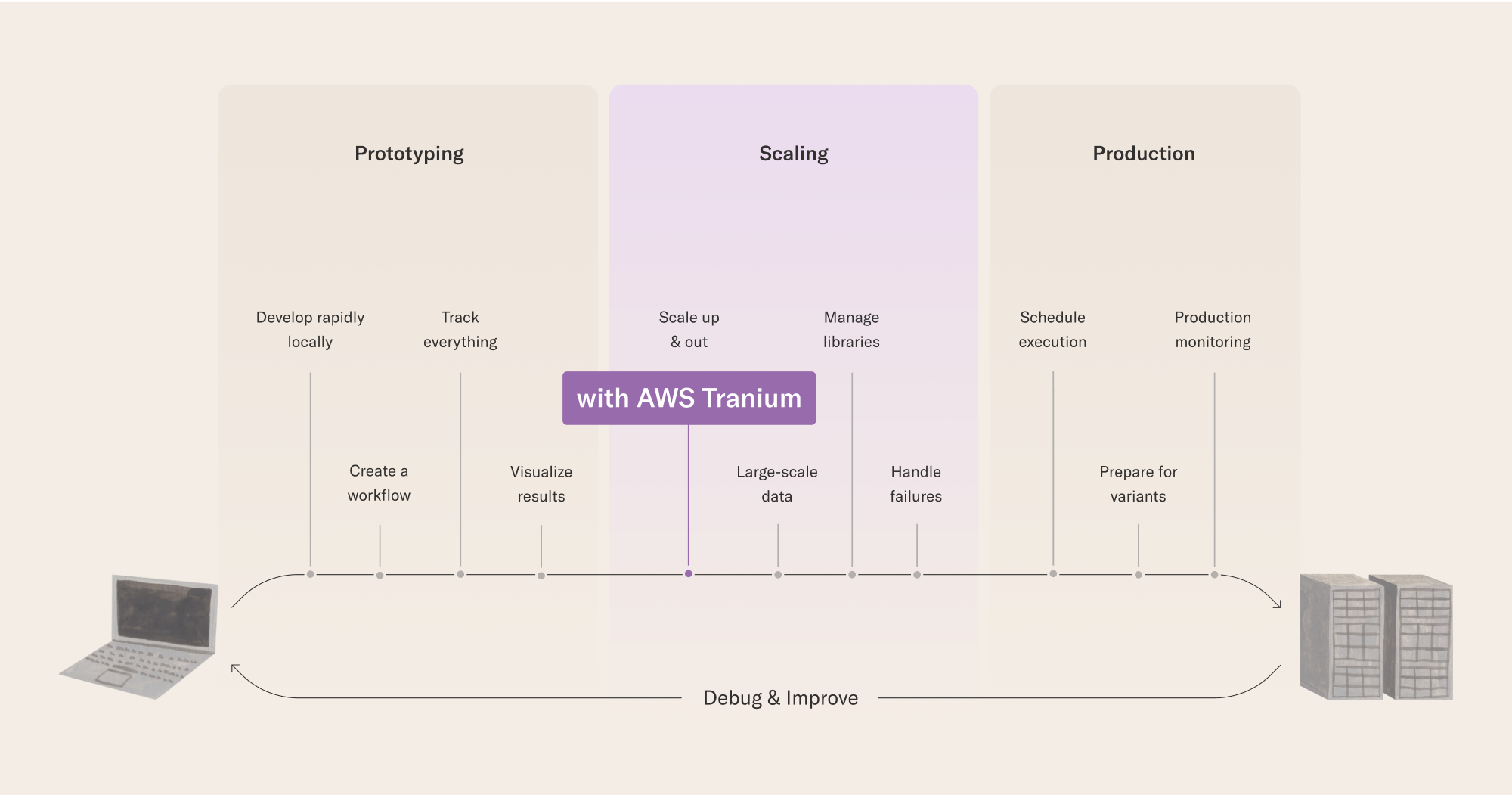

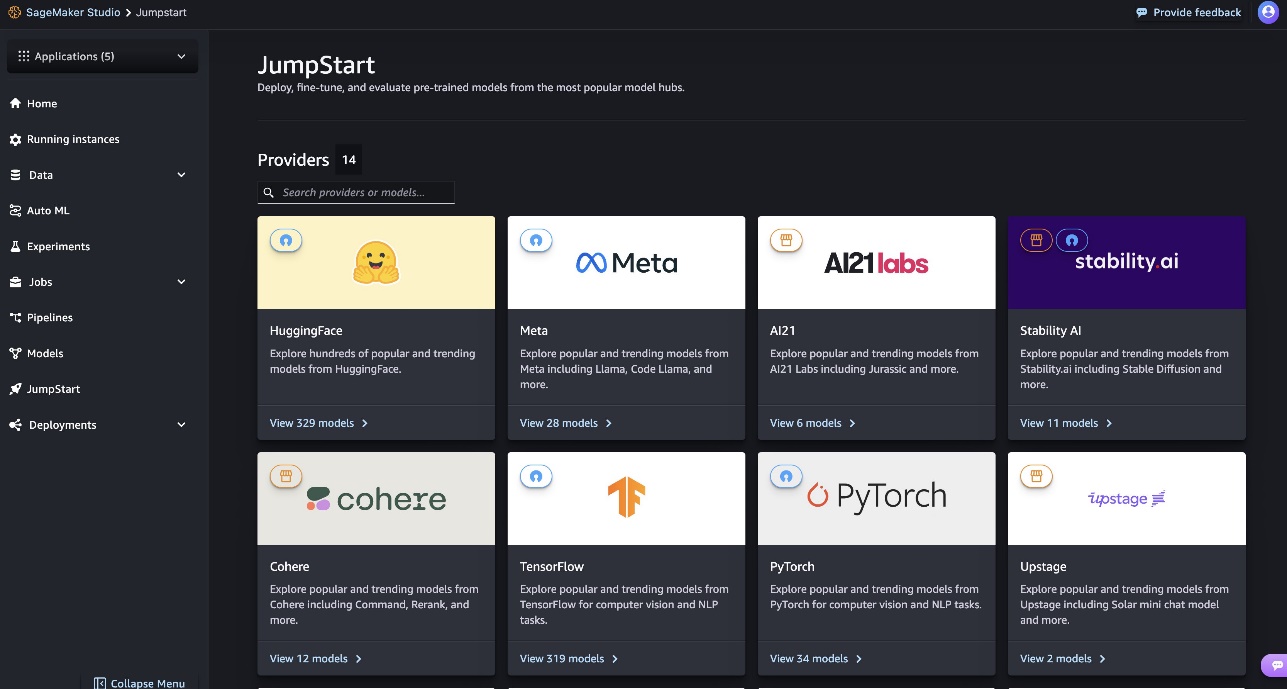

Today, we’re excited to announce the availability of Meta Llama 3 inference on AWS Trainium and AWS Inferentia based instances in Amazon SageMaker JumpStart. The Meta Llama 3 models are a collection of pre-trained and fine-tuned generative text models. Amazon Elastic Compute Cloud (Amazon EC2) Trn1 and Inf2 instances, powered by AWS Trainium and AWS … Read more