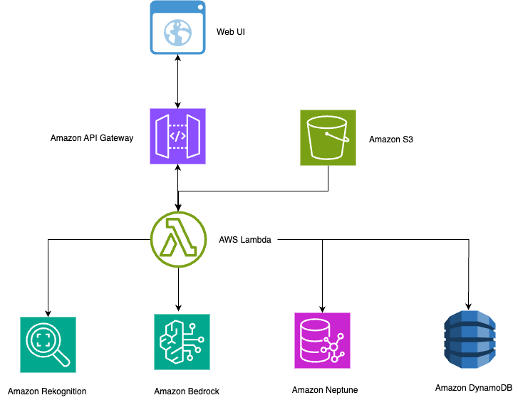

Build an intelligent photo search using Amazon Rekognition, Amazon Neptune, and Amazon Bedrock

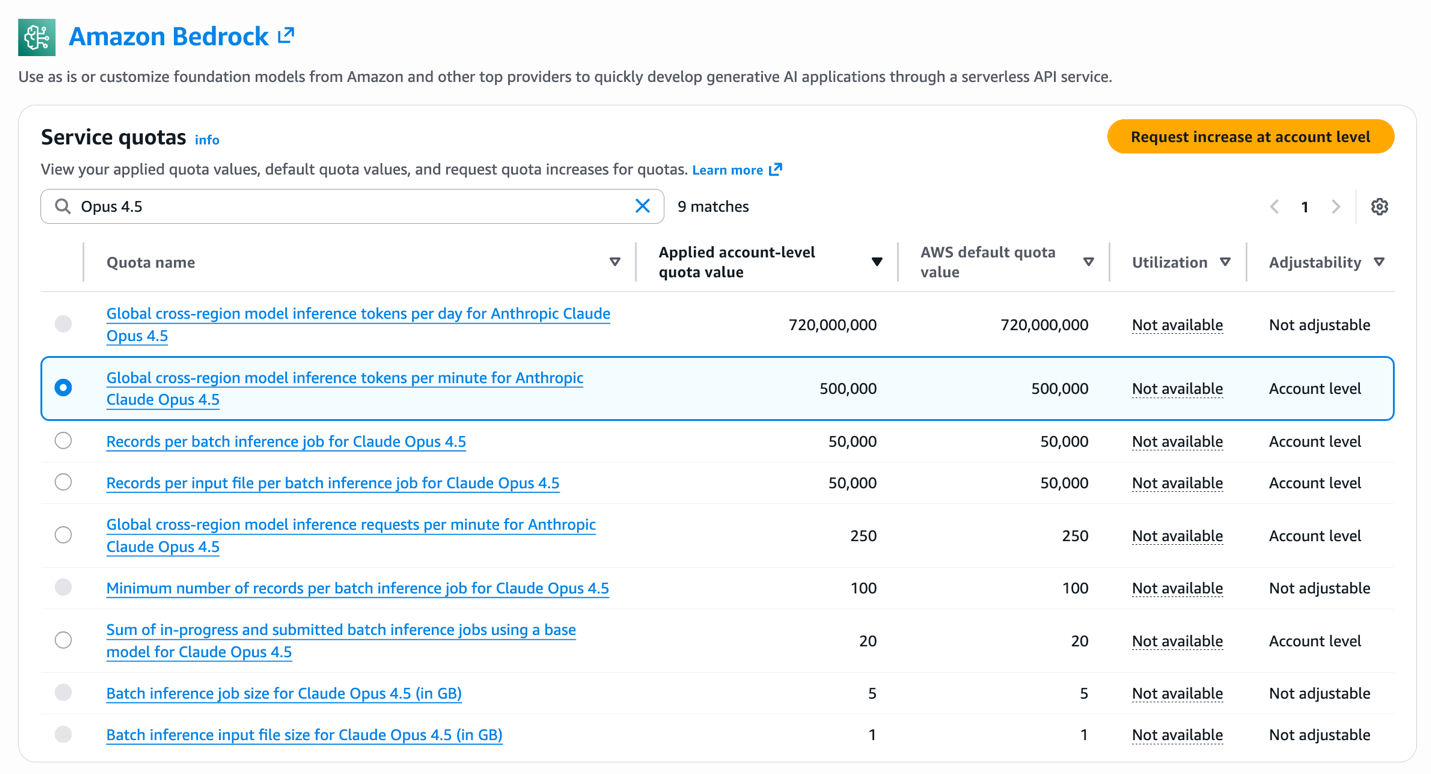

Managing large photo collections presents significant challenges for organizations and individuals. Traditional approaches rely on manual tagging, basic metadata, and folder-based organization, which can become impractical when dealing with thousands of images containing multiple people and complex relationships. Intelligent photo search systems address these challenges by combining computer vision, graph databases, and natural language processing …