Table of Links

Supplementary Material

- Image matting
- Video matting

8. Image matting

This section expands on the image matting experiments, giving further detail on dataset generation and on comparisons with existing methods. We describe how the I-HIM50K and M-HIM2K datasets were created, report detailed quantitative analyses, and present additional qualitative results that illustrate the effectiveness of our approach.

8.1. Dataset generation and preparation

The I-HIM50K dataset was synthesized from the HHM50K [50] dataset, a large collection of human image mattes. We used a MaskRCNN [14] ResNet-50 FPN 3x model, trained on the COCO dataset, to select the single-person images, which gave a subset of 35,053 images. Following the InstMatt [49] methodology, the subjects from these images were composited onto diverse backgrounds from the BG20K [29] dataset, creating multi-instance scenes with 2-5 subjects per image. The subjects were resized and positioned to keep a realistic scale and to avoid excessive overlap, with instance IoUs not exceeding 30%. This process yielded 49,737 images, averaging 2.28 instances per image. During training, guidance masks were generated by binarizing the alpha mattes and applying random dropout, dilation, and erosion operations. Sample images from I-HIM50K are displayed in Fig. 10.

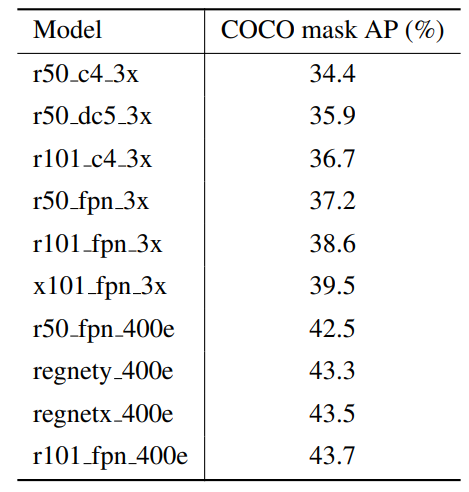
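The guidance-mask step described above (binarize the alpha matte, then apply random dropout, dilation, and erosion) can be sketched as follows. This is a minimal illustration only, not the authors' implementation: the 0.5 threshold, the square kernel, the block-wise dropout, the order of operations, and all function and parameter names are assumptions, since the text does not specify them.

```python
import random


def binarize(alpha, threshold=0.5):
    """Turn an alpha matte (values in [0, 1]) into a 0/1 mask.
    The 0.5 threshold is an assumption."""
    return [[1 if a > threshold else 0 for a in row] for row in alpha]


def _morph(mask, radius, grow):
    """Square-kernel max filter (grow=True) or min filter (grow=False).
    Pixels outside the image are ignored."""
    h, w = len(mask), len(mask[0])
    pick = max if grow else min
    out = []
    for y in range(h):
        row = []
        for x in range(w):
            window = [mask[j][i]
                      for j in range(max(0, y - radius), min(h, y + radius + 1))
                      for i in range(max(0, x - radius), min(w, x + radius + 1))]
            row.append(pick(window))
        out.append(row)
    return out


def dilate(mask, radius=1):
    return _morph(mask, radius, grow=True)


def erode(mask, radius=1):
    return _morph(mask, radius, grow=False)


def dropout(mask, rng, block=2, p=0.1):
    """Zero out each block x block patch independently with probability p."""
    out = [row[:] for row in mask]
    h, w = len(mask), len(mask[0])
    for y in range(0, h, block):
        for x in range(0, w, block):
            if rng.random() < p:
                for j in range(y, min(h, y + block)):
                    for i in range(x, min(w, x + block)):
                        out[j][i] = 0
    return out


def make_guidance_mask(alpha, rng=None, max_radius=2, drop_p=0.1):
    """Binarize, then randomly dilate or erode (or neither), then drop patches.
    The ordering and the parameter ranges are illustrative assumptions."""
    rng = rng or random.Random()
    mask = binarize(alpha)
    op = rng.choice(["none", "dilate", "erode"])
    radius = rng.randint(1, max_radius)
    if op == "dilate":
        mask = dilate(mask, radius)
    elif op == "erode":
        mask = erode(mask, radius)
    return dropout(mask, rng, p=drop_p)
```

Passing a seeded `random.Random` makes the perturbation reproducible, which is convenient when inspecting how strongly a given setting degrades the mask.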

The M-HIM2K dataset was designed to test model robustness to varying mask quality. It comprises ten masks per instance, generated using various MaskRCNN models; the models used are listed in Table 8. Each mask was matched to the instance with which it had the highest IoU against the ground truth alpha mattes, subject to a minimum IoU threshold of 70%. Masks that did not meet this threshold were replaced by masks generated artificially from the ground truth. This process resulted in a set of 134,240 masks, with 117,660 for composite and 16,600 for natural images, providing a robust benchmark for evaluating mask-guided instance matting. Both datasets, I-HIM50K and M-HIM2K, will be released after the acceptance of this work.
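The matching rule above can be sketched as a small routine: for each ground-truth instance, take the predicted mask with the highest IoU and fall back to a ground-truth-derived mask when that IoU is below 70%. This is a hedged sketch under stated assumptions, not the authors' code: masks are plain nested lists of 0/1, the ground-truth alpha mattes are assumed to be already binarized, and the names `iou` and `match_masks` are hypothetical. The text does not say whether matching is one-to-one, so this version allows a predicted mask to be picked by more than one instance.

```python
def iou(a, b):
    """Intersection over union of two equally sized binary masks."""
    inter = union = 0
    for row_a, row_b in zip(a, b):
        for pa, pb in zip(row_a, row_b):
            inter += 1 if (pa and pb) else 0
            union += 1 if (pa or pb) else 0
    return inter / union if union else 0.0


def match_masks(gt_masks, pred_masks, min_iou=0.7):
    """For each ground-truth mask, return (index of best prediction, best IoU).
    The index is None when the best IoU is below min_iou, signalling that a
    mask should instead be generated from the ground truth."""
    results = []
    for gt in gt_masks:
        best_idx, best = None, 0.0
        for i, pred in enumerate(pred_masks):
            score = iou(gt, pred)
            if score > best:
                best_idx, best = i, score
        if best < min_iou:
            best_idx = None
        results.append((best_idx, best))
    return results


# Usage: two instances, two predicted masks (1 x 8 toy images).
gt_masks = [[[1, 1, 1, 1, 0, 0, 0, 0]], [[0, 0, 0, 0, 1, 1, 1, 1]]]
pred_masks = [[[1, 1, 1, 0, 0, 0, 0, 0]], [[0, 0, 0, 0, 1, 1, 0, 0]]]
matches = match_masks(gt_masks, pred_masks)
```

In the toy example the first instance is matched (IoU 0.75), while the second falls below the threshold (IoU 0.5) and would receive an artificially generated mask.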

:::info

Authors:

(1) Chuong Huynh, University of Maryland, College Park (chuonghm@cs.umd.edu);

(2) Seoung Wug Oh, Adobe Research (seoh@adobe.com);

(3) Abhinav Shrivastava, University of Maryland, College Park (abhinav@cs.umd.edu);

(4) Joon-Young Lee, Adobe Research (jolee@adobe.com).

:::

:::info

This paper is available on arXiv under the CC BY 4.0 Deed (Attribution 4.0 International) license.

:::