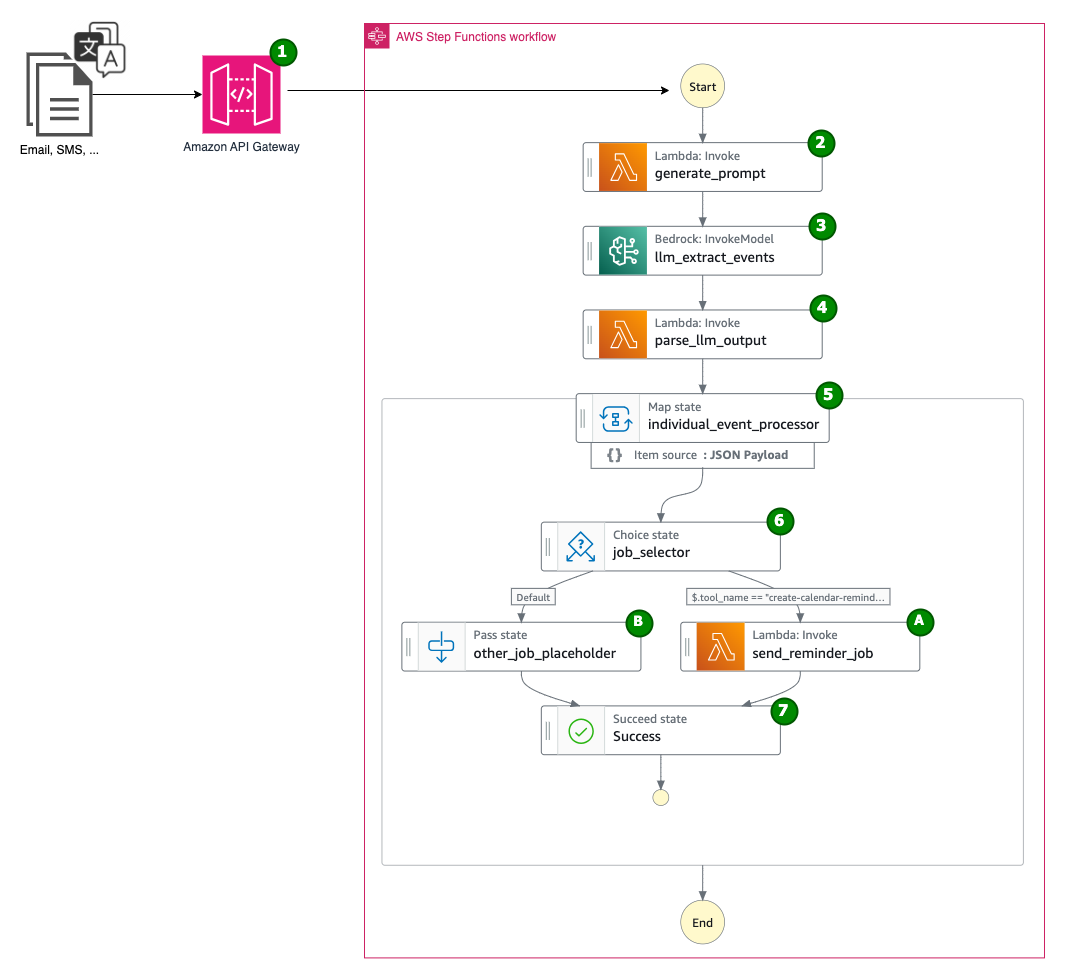

Automating model customization in Amazon Bedrock with AWS Step Functions workflow

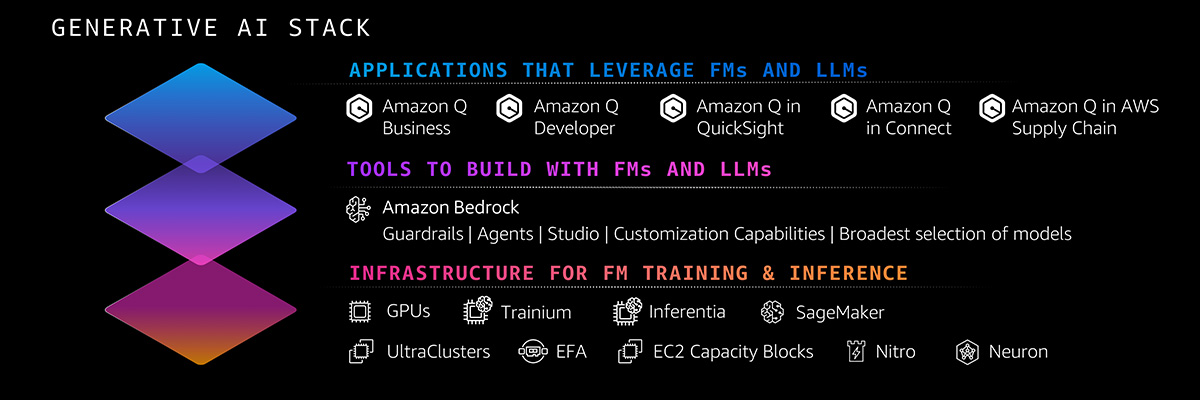

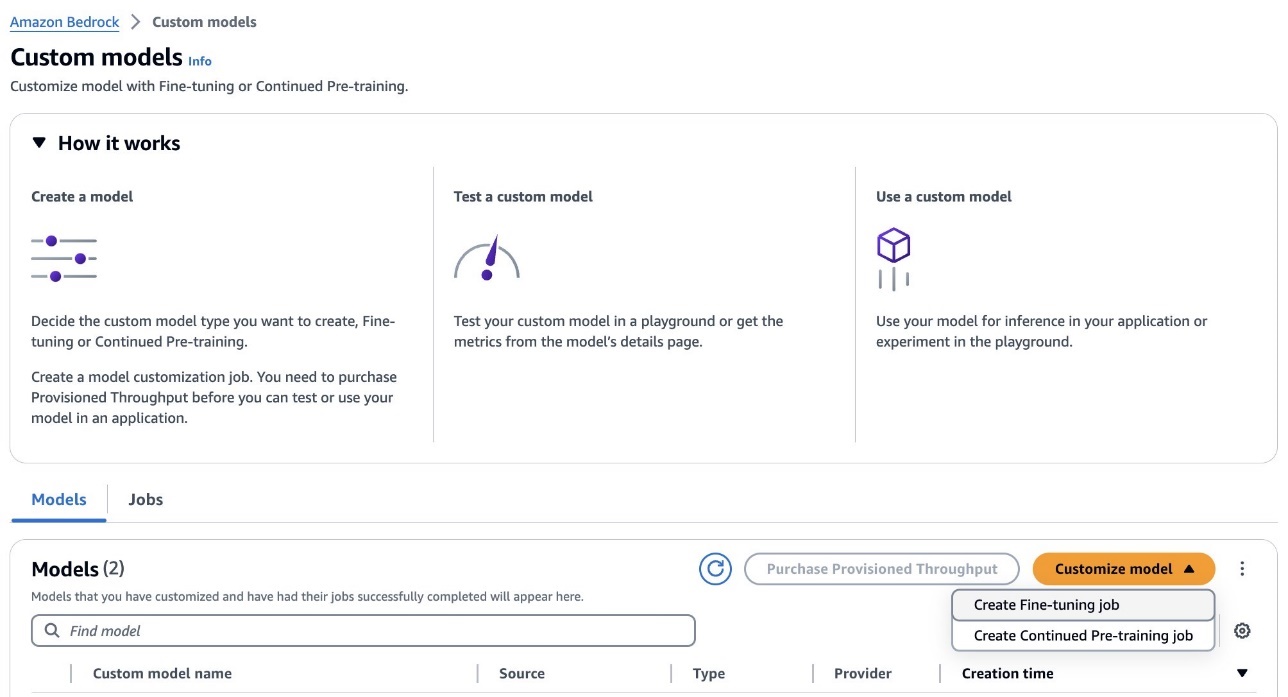

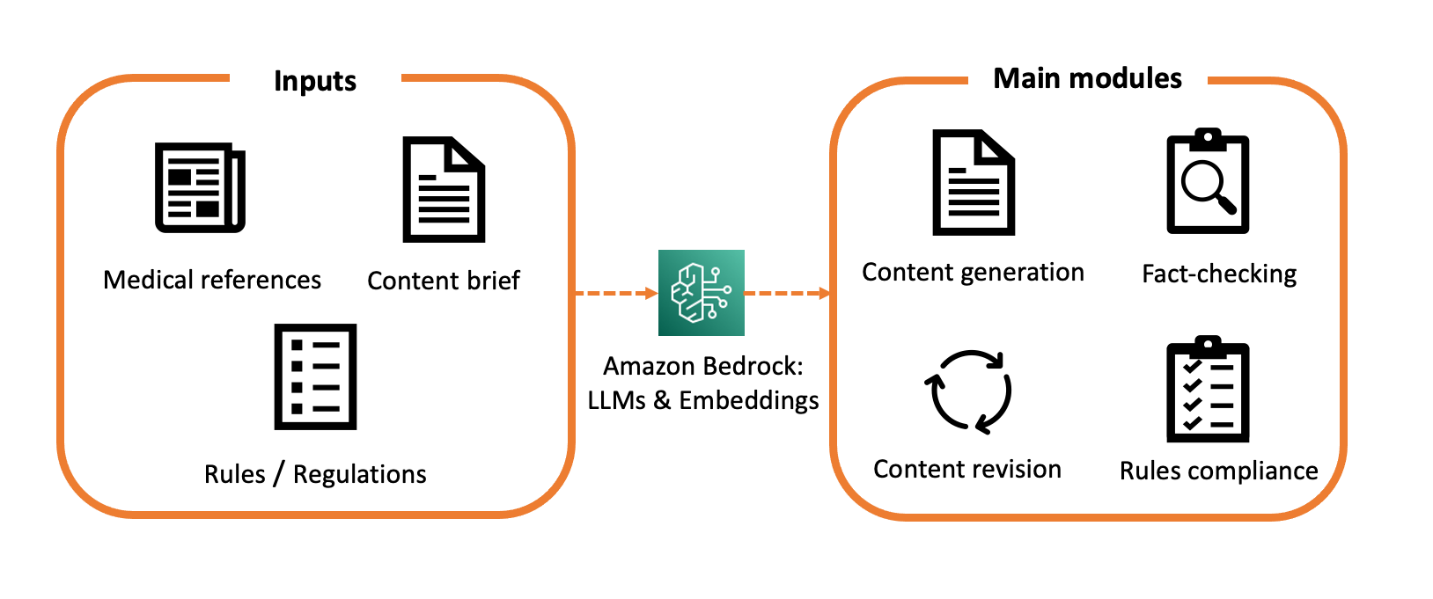

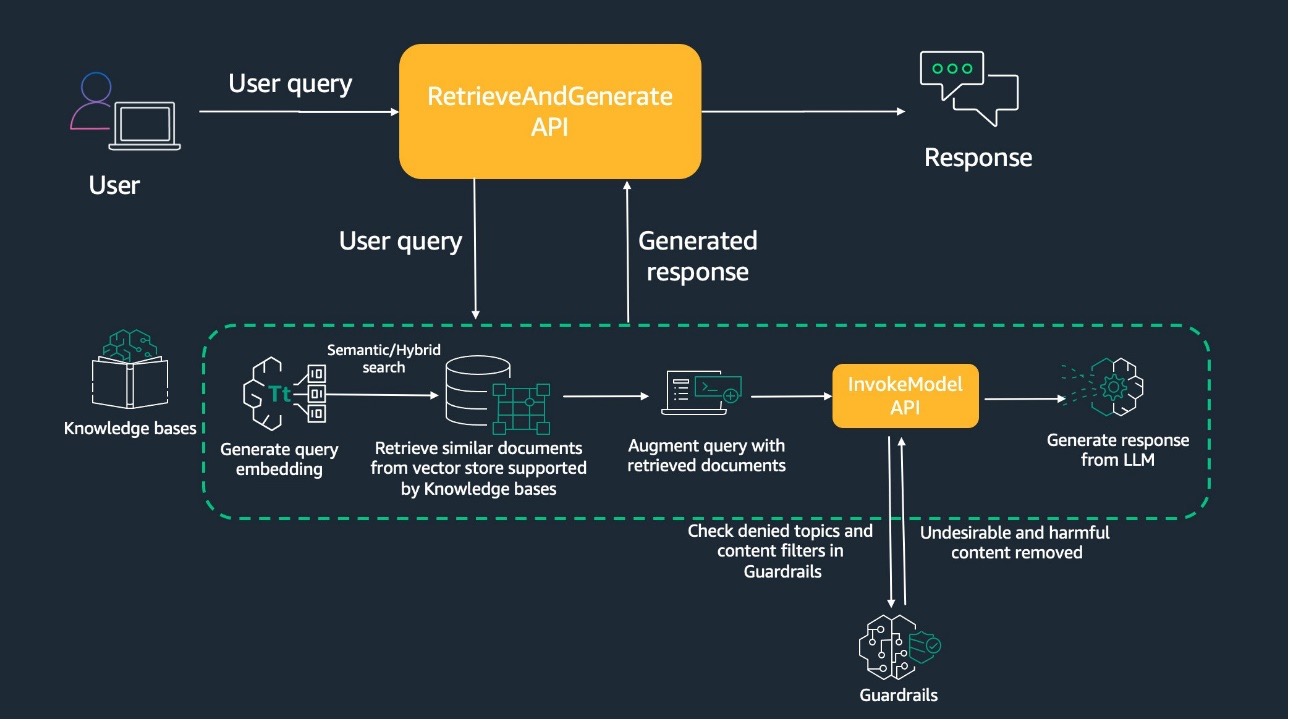

Large language models have become indispensable in generating intelligent and nuanced responses across a wide variety of business use cases. However, enterprises often have unique data and use cases that require customizing large language models beyond their out-of-the-box capabilities. Amazon Bedrock is a fully managed service that offers a choice of high-performing foundation models (FMs) … Read more