Table of Links

3 Preliminaries

3.1 Fair Supervised Learning and 3.2 Fairness Criteria

3.3 Dependence Measures for Fair Supervised Learning

4 Inductive Biases of DP-based Fair Supervised Learning

4.1 Extending the Theoretical Results to Randomized Prediction Rule

5 A Distributionally Robust Optimization Approach to DP-based Fair Learning

6 Numerical Results

6.2 Inductive Biases of Models trained in DP-based Fair Learning

6.3 DP-based Fair Classification in Heterogeneous Federated Learning

Appendix B Additional Results for Image Dataset

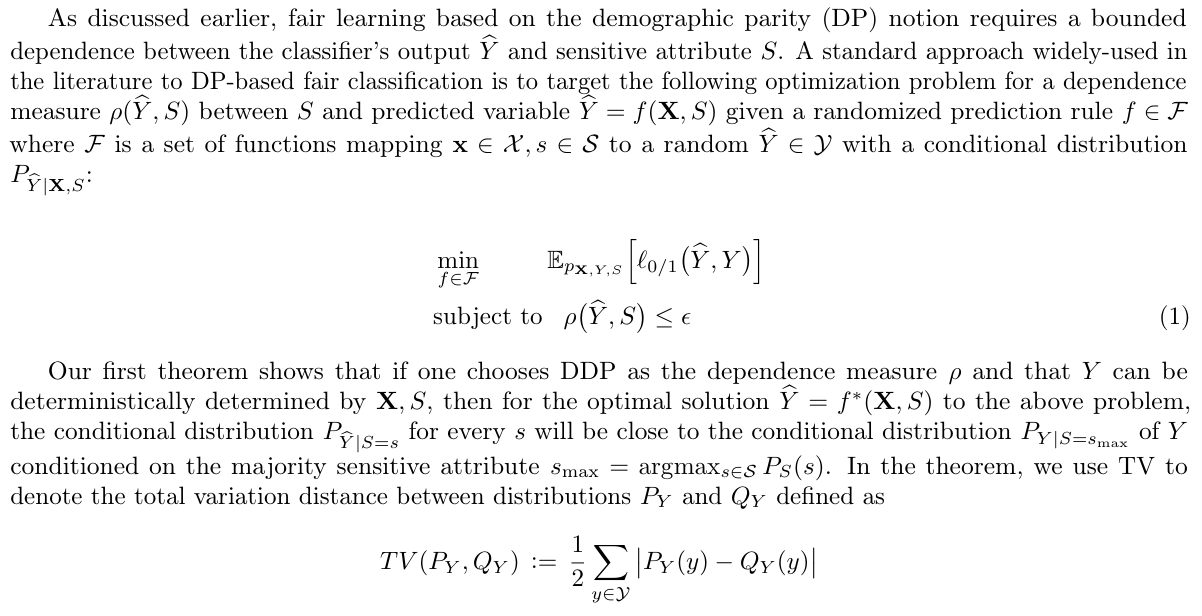

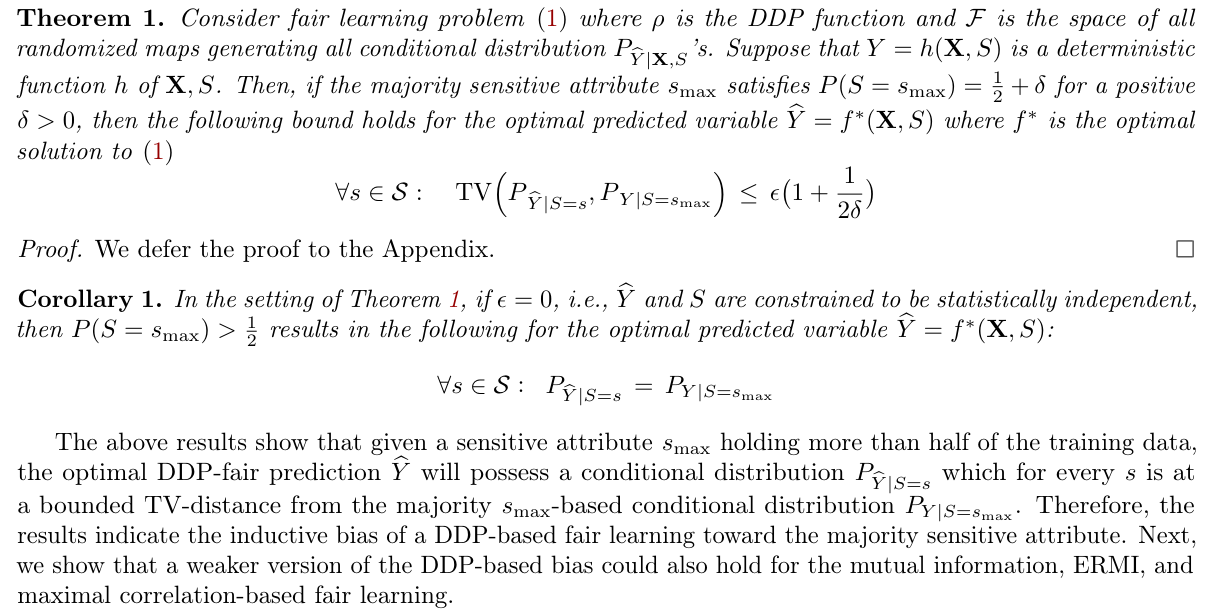

4 Inductive Biases of DP-based Fair Supervised Learning

Proof. We defer the proof to the Appendix.

We note the difference between the bias levels shown for the DDP case in Theorem 1 and for the other dependence measures in Theorem 2. The bias of a DDP-based fair learner could be considerably stronger than that of mutual information-, ERMI-, and maximal correlation-based fair learners, because the maximum of the total variations in Theorem 1 is replaced by their expected value over $P_S$ in Theorem 2.
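To make the gap between a maximum and a $P_S$-weighted expectation concrete, the following is a minimal numerical sketch. It does not reproduce the exact quantities in Theorems 1 and 2. The two-group marginal `p_s`, the conditional distributions `cond`, and the reference distribution `ref` are hypothetical values chosen only for illustration. It shows that when the sensitive attribute is imbalanced, the worst-case total variation across groups can be much larger than its average under $P_S$.

```python
def total_variation(p, q):
    """Total variation distance between two discrete distributions
    defined on the same finite support."""
    return 0.5 * sum(abs(a - b) for a, b in zip(p, q))


# Hypothetical setup (not taken from the paper): a binary outcome and
# two sensitive groups, where the first group is a 90% majority.
p_s = [0.9, 0.1]                  # assumed marginal P_S over the groups
cond = [[0.5, 0.5], [0.2, 0.8]]   # assumed conditional distributions given S = s
ref = [0.5, 0.5]                  # assumed reference distribution

# Per-group total variation distance to the reference distribution.
tvs = [total_variation(c, ref) for c in cond]

# Worst case over groups (the "maximum" form of the bound).
max_tv = max(tvs)

# Average over groups weighted by P_S (the "expected value" form).
mean_tv = sum(w * t for w, t in zip(p_s, tvs))

print(f"max TV over groups:     {max_tv:.3f}")
print(f"expected TV under P_S:  {mean_tv:.3f}")
```

In this toy setting the majority group matches the reference exactly, so the expectation is driven almost entirely by the small minority weight, while the maximum ignores the weights altogether. The expectation can never exceed the maximum, and the two coincide only when every group with positive probability has the same total variation.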

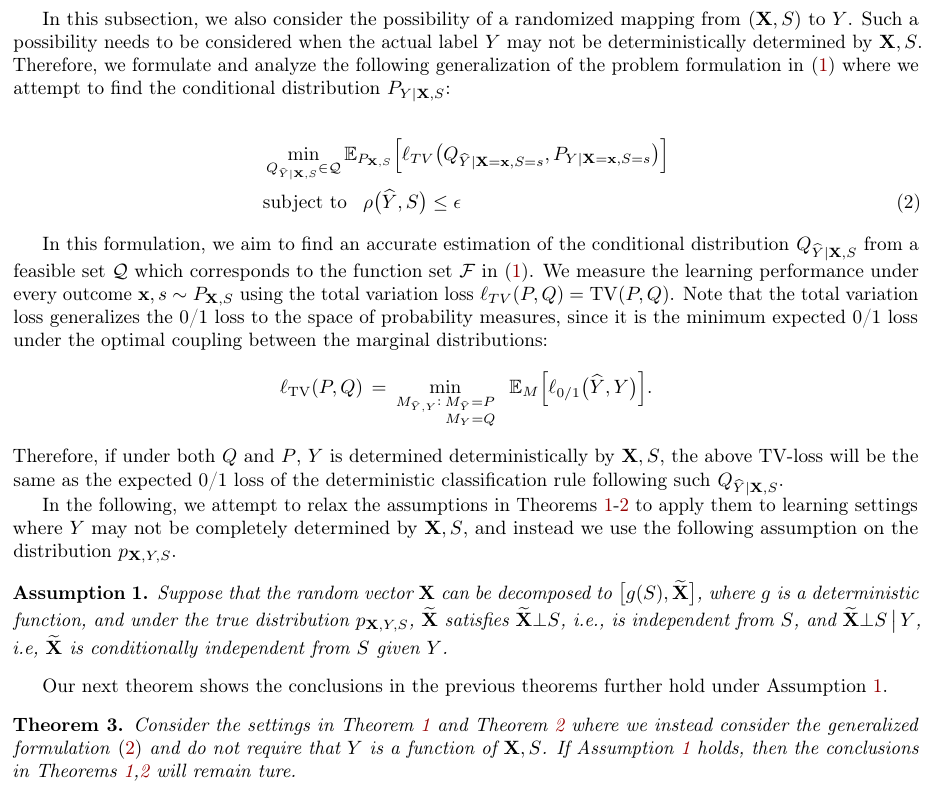

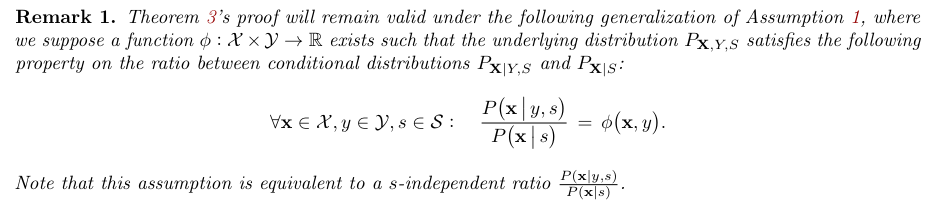

4.1 Extending the Theoretical Results to Randomized Prediction Rule

Proof. We defer the proof to the Appendix.

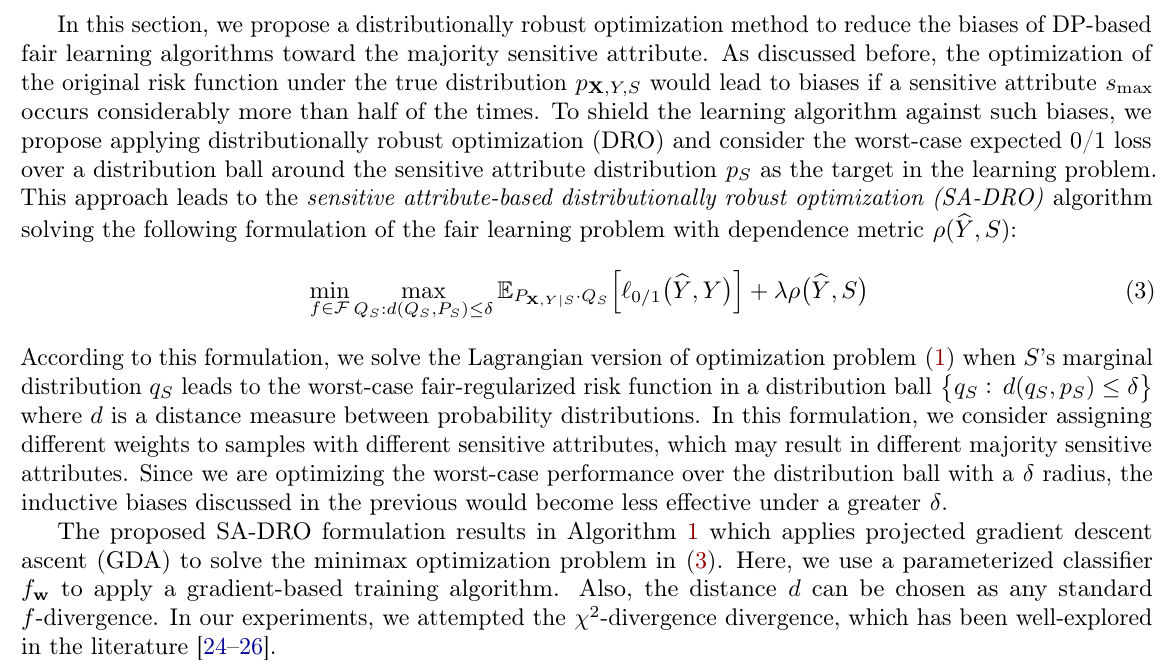

5 A Distributionally Robust Optimization Approach to DP-based Fair Learning

:::info

This paper is available on arXiv under the CC BY-NC-SA 4.0 DEED license.

:::

:::info

Authors:

(1) Haoyu LEI, Department of Computer Science and Engineering, The Chinese University of Hong Kong (hylei22@cse.cuhk.edu.hk);

(2) Amin Gohari, Department of Information Engineering, The Chinese University of Hong Kong (agohari@ie.cuhk.edu.hk);

(3) Farzan Farnia, Department of Computer Science and Engineering, The Chinese University of Hong Kong (farnia@cse.cuhk.edu.hk).

:::