Month: April 2024

What Is Wrong With Token Rewards And How to Fix It

In recent years, token rewards have become a popular mechanism for incentivizing various behaviors, ranging from participation in online communities to driving specific actions within decentralized networks. However, despite their widespread adoption, token reward systems often encounter significant challenges and criticisms. From issues of sustainability to concerns about fairness and effectiveness, several key problems need …

‘Lost’ Yuga Labs restructures again, with layoffs, new executive

The creator of the Bored Ape Yacht Club has been struggling with a changing market and still plans to focus on its Otherside metaverse project.

“How Many Colors Can the Human Eye See?”: The Application

How many colors can the human eye see? A thousand? A million? We are not talking about how many colors you can name, but about how many a person can see and compare. In this article, we will begin to develop a prototype tool that could be used to answer this question. …

Sam Altman, Satya Nadella Join High-Powered AI Safety Board for Homeland Security

Twenty leading AI developers—including OpenAI, Microsoft, NVIDIA, and Google—pledge to help the US Department of Homeland Security protect critical infrastructure.

Working Together From Afar: Easy Strategies for Remote Team Collaboration

I’m excited to share a few simple, effective ways to work together as a team, even when we’re miles apart. Remote teams are becoming more common, and it is essential to have the right techniques in place to keep things running smoothly. So, let’s dive into some easy …

Photo-sharing community EyeEm will license users’ photos to train AI if they don’t delete them

EyeEm, the Berlin-based photo-sharing community that exited last year to Spanish company Freepik after going bankrupt, is now licensing its users’ photos to train AI models. Earlier this month, the company informed users via email that it was adding a new clause to its Terms & Conditions that would grant it the rights to upload …

Navigating Complex Search Tasks with AI Copilots: The Undiscovered Country and References

This paper is available on arXiv under a CC 4.0 license. Author: Ryen W. White, Microsoft Research, Redmond, WA, USA. From Section 5, "The Undiscovered Country": AI copilots will transform how we search. Tasks are central to …

Crypto Biz: X payment system, Block moves into Bitcoin mining and more

This week’s Crypto Biz examines X’s upcoming payment system, the NYSE’s potential 24/7 trading, Block’s expansion into Bitcoin mining, and more.

John Deaton files amicus brief in support of Coinbase appeal against SEC

The lawyer said he had filed a brief on behalf of 4,701 Coinbase customers for no charge as part of his advocacy work in the crypto space.

EU DeFi regulations set to welcome big banks, challenge crypto natives

New rules under the MiCA framework may encourage big banks to enter the DeFi space, potentially complicating compliance for native crypto projects.

How I search in 2024

Google is officially a $2 trillion company

Google has spent the past year dealing with two of the biggest threats in its 25-year history: the rise of generative AI and the growing drumbeat of regulation. AI, in particular, has shaken the company to its core: it’s made big search changes, realigned the Search, Android, and hardware teams around AI, …

Meta AI tested: Doesn’t quite justify its own existence, but free is free

Meta’s new large language model, Llama 3, powers the imaginatively named “Meta AI,” a newish chatbot that the social media and advertising company has installed in as many of its apps and interfaces as possible. How does this model stack up against other all-purpose conversational AIs? It tends to regurgitate a lot of web search …

‘Notcoin’ Game Token Is Launching Soon—Here’s How the Claim Works

The NOT token is dropping in a matter of days, Notcoin’s co-creator told Decrypt’s GG. Here’s what to expect from the claim process.

Biden’s Homeland Security team taps tech elite for AI defense board

The board includes the CEOs of Adobe, Alphabet, Anthropic, AMD, AWS, IBM, Microsoft and Nvidia, as well as other business, civil rights and academic leaders.

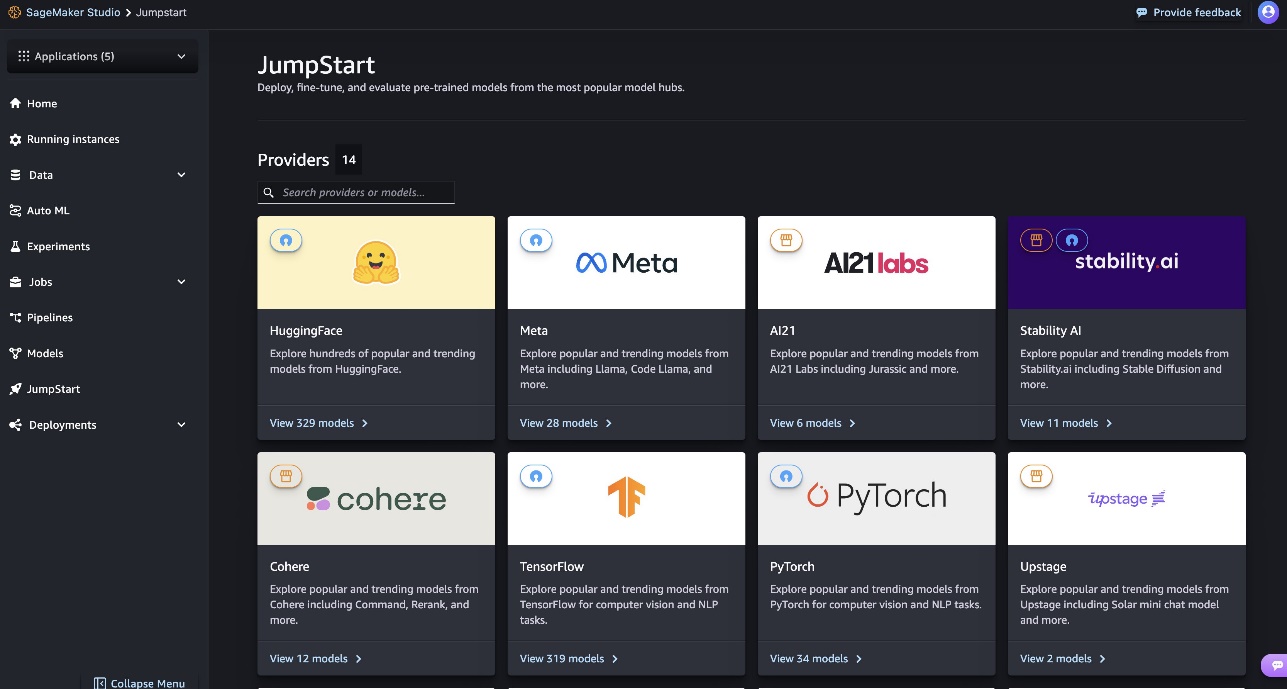

Databricks DBRX is now available in Amazon SageMaker JumpStart

Today, we are excited to announce that the DBRX model, an open, general-purpose large language model (LLM) developed by Databricks, is available for customers through Amazon SageMaker JumpStart to deploy with one click for running inference. The DBRX LLM employs a fine-grained mixture-of-experts (MoE) architecture, pre-trained on 12 trillion tokens of carefully curated data and …
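
The excerpt only names the mixture-of-experts architecture. As a rough illustration of the general idea, here is a minimal sketch of a generic top-k gated MoE layer in plain Python: a router scores every expert, only the k best-scoring experts actually run, and their outputs are mixed with softmax weights. This is not DBRX's implementation. The router, the toy experts, and the sizes used (4 experts, k=2) are hypothetical choices for the example, and whatever makes DBRX's experts "fine-grained" is not modeled here.

```python
import math
from typing import Callable, List, Sequence, Tuple

Vector = List[float]
Expert = Callable[[Vector], Vector]


def softmax(xs: Sequence[float]) -> List[float]:
    """Numerically stable softmax (subtract the max before exponentiating)."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(
    x: Vector, router: List[Vector], experts: List[Expert], k: int
) -> Tuple[Vector, List[int]]:
    """Route x to the k highest-scoring experts and mix their outputs.

    Returns the combined output and the indices of the experts that ran.
    """
    # One router logit per expert: dot product of x with that expert's row.
    logits = [sum(w * xi for w, xi in zip(row, x)) for row in router]
    # Keep only the k experts with the largest logits (sparse activation).
    chosen = sorted(range(len(experts)), key=lambda i: logits[i], reverse=True)[:k]
    # Softmax over the selected logits only, so the k gate weights sum to 1.
    gates = softmax([logits[i] for i in chosen])
    out = [0.0] * len(x)
    for gate, i in zip(gates, chosen):
        y = experts[i](x)  # only the chosen experts are evaluated
        out = [o + gate * yi for o, yi in zip(out, y)]
    return out, chosen


if __name__ == "__main__":
    # Hypothetical toy setup: expert i simply scales its input by (i + 1).
    experts = [lambda v, s=i + 1: [s * t for t in v] for i in range(4)]
    # Hypothetical router: expert i receives logit i * x[0].
    router = [[float(i), 0.0] for i in range(4)]
    out, chosen = moe_forward([1.0, 2.0], router, experts, k=2)
    print(chosen, out)
```

With this toy router, experts 3 and 2 are selected and the other two never run, which is the property that lets an MoE model hold many parameters while activating only a fraction of them per token.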

Apple Releases 8 Small AI Language Models To Compete With Microsoft’s Phi-3

Competition in the AI space has started to shift from building the largest models to the smallest, most capable tools.